Since the inauguration of the Voltaire Foundation’s Besterman Lectures in 1997 by 18th-century historian Robert Darnton, the Enlightenment has been their concern. The Besterman Lecture of 2017, from which this essay is derived, announced a rather different focus, on digital computing in Enlightenment scholarship. It is characteristic of the technology that during the intervening six years it has progressed significantly and so raised new questions for scholars to consider. But it is equally characteristic that not as much has changed as may seem – plus ça change, plus c’est la même chose is never more than partially true – and some fundamentals have not changed at all. Reason is still the link between the preoccupation of the Century of Light and the original purpose of the machine, built to model ‘the capacity for rational thought’.1 With more than seventy years of its history in the arts and in the natural and human sciences, identifying the digital machine in this way may seem new, even odd from a utilitarian or technical perspective. But given the ongoing, noisy confusion over its implications for how we live our professional and private lives, going back to its origins and into the processes of thinking that the digital machine entails seems to me the best way of making sense of it as a work of human art for humane ends. Doing so raises in turn two practical questions: what fundamentals of reasoning do we need to consider as scholars when we set about implementing our specific projects? How might we expect its implementations to change what we do?

A warning: like the lecture, this essay is necessarily tentative and exploratory, both because digital computing, in the scheme of things, is young, and because the machine was designed for learning by modelling, about which more later. In describing his own work in an interview, the eminent Japanese roboticist Masahiro Mori compared himself to the dog in the Japanese folktale Hanasaka Jiisan, who runs out into an old couple’s garden sniffing the ground, then stops and barks insistently, ‘dig here!’ ‘dig here!’. The man digs and finds a hoard of gold coins. ‘I seem to have a good nose for sniffing out interesting things,’ Mori commented, ‘but I don’t have the skill to dig them up. That’s why I bark “Dig here!” and then other people will dig and find treasure’ (Kageki 2012, p.106). That’s what you can expect of me as well, to run about in our garden, stop, bark and hope that someone will dig.

1. To the deep end

In the epigraph to his first discussion of ‘distant reading’, literary historian Franco Moretti quotes Aron’s words from the libretto to Arnold Schönberg’s unfinished and quite unorthodox opera, Moses und Aron: ‘Meine Bestimmung, es schlechter zu sagen, / als ich es verstehe’ (‘My mission: to say it more simply than I understand it’; Schönberg 1963, act II, scene 5). Moretti strikes an implicit analogy: on the one hand, two humanly comprehensible simplifications of the transcendent, Aron’s Golden Calf and computational results; on the other, two rich complexities beyond comprehension, namely the idea of the divine and the whole of literature. Between them is the negotiator. Leibniz, in Grundriss eines Bedenkens von Aufrichtung einer Societät (1671), saw the relationship somewhat differently: not an external drama but ‘Theoricos Empiricis felici connubio konjungiert, von einem des andern Mängel supplieret’: an ongoing internal negotiation within a happy marriage joining theoreticians and empirics (Leibniz 1958, p.14). This relationship, between ‘the dirt and the word’, as Emily Vermeule once said of archaeology and philology (Vermeule 1996), is where the promise of digital computing unfolds. My aim here is to question and explore that promise.

To my mind the negotiating is central: it shifts attention from the fact that computing and the humanities have intersected to the actions we take in the intersection. Leibniz’s metaphor of negotiation, the felix conubio, is a provocative one, for in a relationship of that kind, each partner affects the other and is in turn affected; in a manner of speaking they co-evolve. So also the developmental spiral in the history of technology, from invention to assimilation to new invention. The traffic between scholarship and computing is usually conceived and followed as if it were a one-way street. That is wrong: it’s a metamorphic recursion.

Perhaps Leibniz – or perhaps Voltaire? – was the last in the West to be capable of truly encyclopaedic knowledge. Undoubtedly the many specialisms into which the whole range of their concerns has branched, and branched again and again, are affected by the digital machine. But I want to argue for more than the effect of the one on the other. I want to argue for the intellectual relevance of digital computing and scholarship to each other. Both are undervalued: computing by scholars who regard the machine as merely a tool of correspondence, access and publication; the artefacts and questions of scholarship by technical practitioners who see only data and algorithms. On the one hand, new tools change the questions you can ask as well as the volume of material you can interrogate and may bring about a shift in perspective. Neurocognitive scientist Michael Anderson puts it this way: ‘when we invent scales, rulers, clocks, and other measuring devices, along with the specific practices necessary for using them, we are not merely doing better with tools what we were doing all along in perception. Rather, we are constructing new properties to perceive in the world […] properties that actually require these tools to perceive them accurately’ (Anderson 2014, pp.181–82) – or perhaps, as I wrote recently, to perceive them at all (McCarty 2022, p.251). On the other hand, digitalisation of the artefacts and questions of the literae humaniores into algorithmic form, as problematic as that may seem, requires scholarly understanding to get it right, and so techniques and software evolve. Should it be a surprise that, as the general-purpose machine encounters the complex and subtle data of these literae, our demands on it grow, software techniques change, data expands and the machine itself is improved?

Thus my mission, if you will: to summon, to invite, to entice as many bearers of grain from as many silos of the humanities and arts as possible, including those that have been around since long before the digital machine was invented, so that our understanding of what we might do with the machine does not suffer from starvation of the mind and spirit. In a nutshell, this understanding shows us that the machine is not just an obedient servant to our whims, thoughts and questions. By its resistance to us as well as by its enabling amplification of our imaginative capacities, it has the potential to become a powerful companion with which to reason, to question and perhaps, someday, with whom to converse. I am not making claims about the future, only advising that the history of making absolute distinctions does not encourage the practice.

Allow me to enlarge the point. If the computer were only a platform for useful applications – a knowledge jukebox, filing system, communications platform, digital typewriter and the like – then there would be much less for me to talk about. As ubiquitous and central to our work as these applications are, as much as they affect us, they are not my subject here, nor are the genuine accomplishments of digital scholarship. These are growing in number and easy to find – as long as you know where to look and are prepared to separate the wheat from the chaff.

Later I shall return to the use of the machine for straightforward access to stuff. But throughout this essay my emphasis will be on the enlightening cognitive resistance just raised, and on a kind of problematic liberation inherent to the machine from its beginnings. I want to persuade you that very quickly such enquiry leads to the deep end, in which the older disciplines have from their beginnings been swimming and teaching others to swim.

2. Machines to think with

But since this essay is for the inaugural issue of Digital Enlightenment Studies, I must at least begin with a class of examples relevant to it and to the Voltaire Foundation’s ambitious editorial project.

In his essay ‘Editing as a theoretical pursuit’, Jerome McGann cites a number of remarkable scholarly editions emergent, as he says, ‘under a digital horizon [… prophesying] an electronic existence for themselves’. These codices, he continues, ‘comprise our age’s incunabula, books in winding sheets rather than swaddling clothes. At once very beautiful and very ugly, fascinating and tedious, these books drive the resources of the codex to its limits and beyond’ (McGann 2001, p.79). Hence the urgent dream of the digital edition, with its many TEI-encoded approximations. I do not want to seem to belittle or arrogantly to correct the industry of scholarship producing these editions. Rather I want to talk about this industry’s ‘theoretical pursuit’ from a theoretical perspective relevant to all the disciplines of the arts and letters (for which I have co-opted the term literae humaniores). Again, my subject is the potential of the digital medium to respond to the emergent demands not only of the critical edition but also of all other scholarly forms of expression.

The critic I. A. Richards, who helped establish English literary studies at Cambridge, began his book Principles of Literary Criticism with the assertion that ‘A book is a machine to think with’ (Richards 1924, p.1). He compared his book ‘to a loom’ – perhaps not coincidentally suggesting Jacquard’s machine, the control mechanisms of which Charles Babbage adopted for his Analytical Engine in 1836 (Essinger 2004; Riskin 2016; Pratt 1987, pp.113–25). In Lady Ada Lovelace’s words, ‘the Analytical Engine weaves algebraic patterns just as the Jacquard loom weaves flowers and leaves’ (Lovelace 1843, p.696).

With the digital edition in mind, I want to put you in mind of how the design of any such ‘machine to think with’, from the cuneiform tablets of Babylonia and the papyrus scrolls of Alexandria to the paperback, shapes the thinker’s cognitive paths. Consider these six examples:

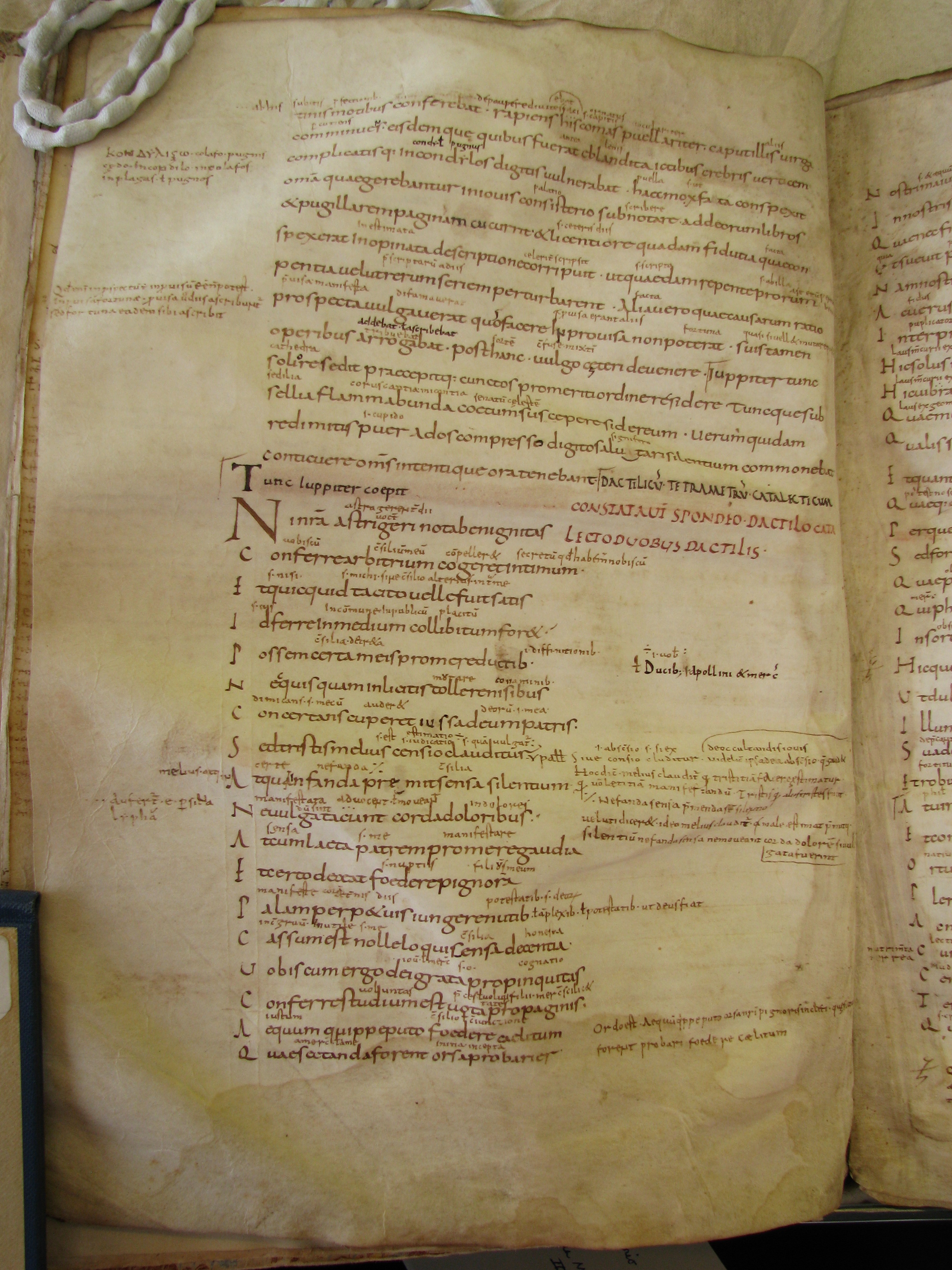

The 9th-century glossed manuscript of Martianus Capella’s Late Antique work De nuptiis (On the marriage of Philology and Mercury), in which the glosses weave together traditional authorities and commentary with the 5th-century text. Glosses here and elsewhere not only clarify; sometimes they obscure by encryption, word-play, puzzles, allegories and etymologies, paradoxically revealing by concealing. The reader’s path is often, purposefully, an intricate maze (Figure 1; see O’Sullivan 2012).

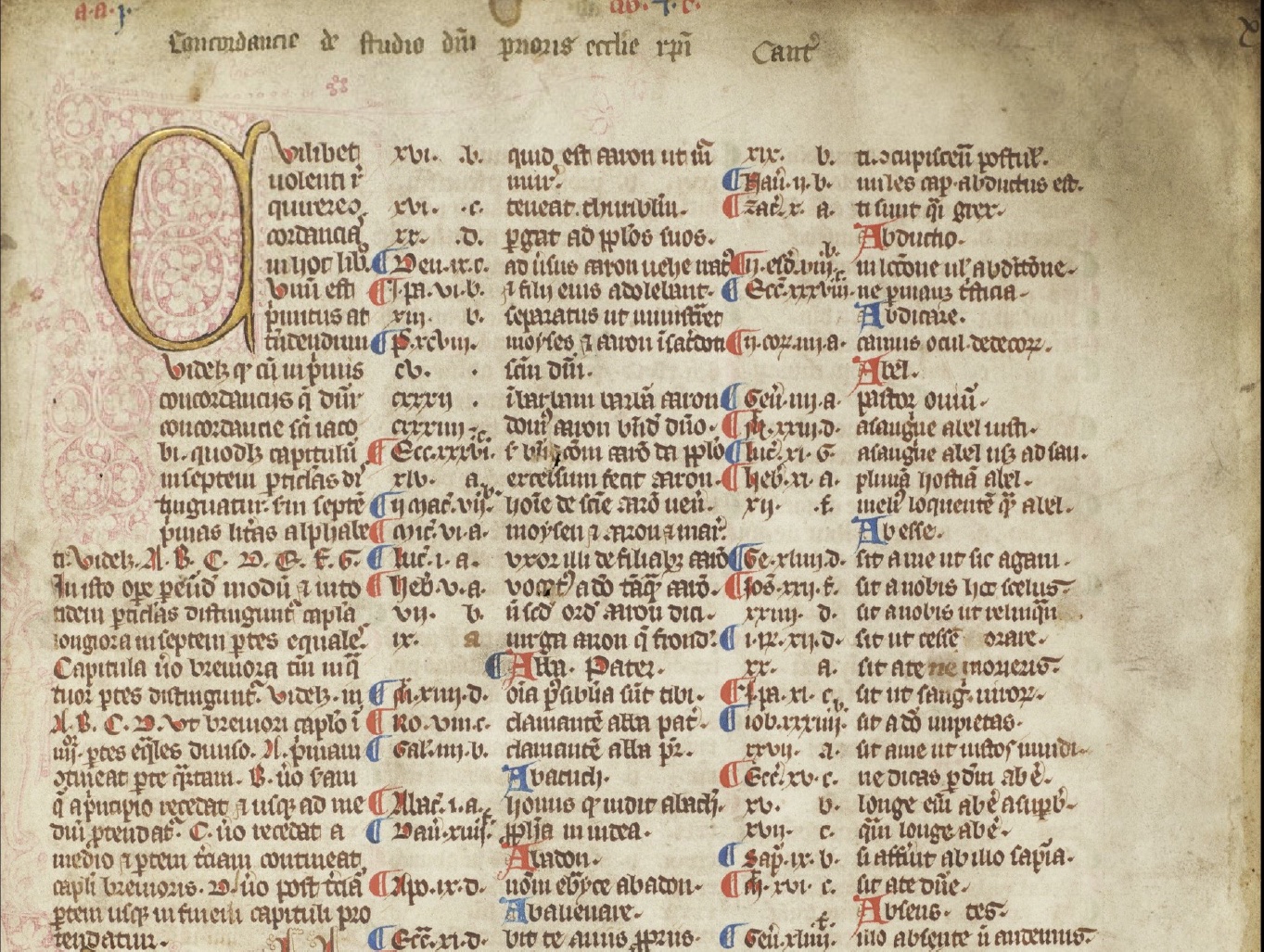

A verbal concordance from the late Middle Ages. This form of reference was invented at the monastery of St Jacques in Paris in the late 12th or early 13th century. It directs the enquirer from a given word to all those passages of the Vulgate where it is attested; reading is to some degree randomised as well as directed (Figure 2; see Rouse and Rouse 1991, ch. 7; Rouse and Rouse 1974).

An early 20th-century English Bible (Authorised or King James Version), in a format pioneered by C. I. Scofield, hence the Scofield Reference Bible (Scofield 1917), named by its publisher the Old Scofield Study Bible, still in print. Note the central column on each page linking passages, both within each Testament and between them, in a system Scofield called ‘connected topical references’. These references, however, have their origin in the ancient tradition of typological interpretation of Scripture, following the principle articulated by St Augustine: ‘quamquam et in Vetere Nouum lateat, et in Nouo Vetus pateat’ (Quaest. in Exodo 73): ‘in the Old the New is concealed, and in the New the Old is revealed’ (Figure 3; for typology see Auerbach 1984, orig.1944).

A contemporary, computer-generated keyword-in-context (KWIC) concordance, the format of which forestalls reading, redirecting attention from the centred word to its nearest neighbours left and right (Figure 4). It is the basis for the field of Corpus Linguistics, according to linguist J. R. Firth’s dictum, ‘You shall know a word by the company it keeps!’ (Firth 1957, p.11) – a version of the proverb ‘noscitur e sociis’ (‘he is known by the company he keeps’).

Ramón Llull’s 14th-century paper machine, designed to engage the reader combinatorially in correlating the terms of a ‘spiritual logic’ (Figure 5; see Johnston 1987).

One of Herman Goldstine’s and John von Neumann’s diagrams to illustrate the logical flow of automated reasoning during operation of a stored-program digital computer (Figure 6; Goldstine and von Neumann 1947; see Dumit 2016). More about that later.
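The keyword-in-context format of the fourth example is simple enough to sketch in a few lines of Python. What follows is only an illustration under stated assumptions, not the software behind Figure 4: the function names `kwic` and `format_kwic`, the three-word window and the crude tokenisation are all hypothetical choices of mine, and the second sentence of the sample text is invented for the purpose.

```python
import re


def kwic(text, keyword, width=3):
    """Return (left context, keyword, right context) for each occurrence."""
    # Assumption: a crude tokeniser that lower-cases and keeps word characters.
    tokens = re.findall(r"[\w'’]+", text.lower())
    rows = []
    for i, token in enumerate(tokens):
        if token == keyword.lower():
            left = " ".join(tokens[max(0, i - width):i])
            right = " ".join(tokens[i + 1:i + 1 + width])
            rows.append((left, token, right))
    return rows


def format_kwic(rows):
    """Right-align the left contexts so that the keyword forms a centred column."""
    pad = max((len(left) for left, _, _ in rows), default=0)
    return [f"{left:>{pad}}  [{kw}]  {right}" for left, kw, right in rows]


# Firth's dictum, followed by an invented sentence to give a second occurrence.
sample = ("You shall know a word by the company it keeps. "
          "A word is known by its neighbours.")

for line in format_kwic(kwic(sample, "word")):
    print(line)
```

Even at this scale the layout does what the format is meant to do: the eye is drawn away from the sentence as a unit of reading and towards the column of neighbours on either side of the centred word.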

My point is phenomenological and applies to the whole history of verbal communication: that the medium mediates; that tools shape the thoughts and actions of the person using them, and themselves embody what philosopher Davis Baird has called ‘thing knowledge’ (Baird 2004). The question for us is how the ‘thing knowledge’ of the digital machine does it differently compared to other media.

Figure 6. Flow diagram of coding for a digital computer. Goldstine and von Neumann 1947, p.12. By permission of the Institute for Advanced Study, Princeton NJ.

3. Modelling

The best way to begin opening up this difference is with the machine’s primary conceptual primitive: iteratively to manipulate a software model, or formal representation of whatever is to be computed and how this computing is to proceed (see McCarty 2019).

Decades ago, models were devised by hand before or during a programmer’s work. In the arts and humanities, prior to standard applications (now ‘apps’), modelling and programming were often collaborative between scholar and programmer. The value of the collaboration gave rise to the persistent idea of programming as an essential skill for scholars to learn. Some centres and departments, such as the Institut für Digital Humanities in Cologne, now teach programming to undergraduates and postgraduates in the humanities (Geisteswissenschaften).

In artificial intelligence research and its applications to major public services (e.g. searching the web), models are in effect designed and built by independent ‘machine learning’ techniques that identify statistical patterns in very large collections of online data. The impressive success of AI software, for example GPT-4 and ChatGPT, is due to the surprisingly high degree of repetition in all human behaviour. I say ‘impressive’ with care, however: what we get from the machine is the product of assembling at speed what people ordinarily write in the ways they ordinarily write it, hence necessarily limited to the performances of a ‘stochastic parrot’ (Bender et al. 2021). Many questions follow – for example about plagiarism, the force of canonised habit and, most worrying of all, ‘surveillance capitalism’ (Zuboff 2019) and surveillance generally (Cover 2015, chapter 8) that ‘Big Data’ empowers. I regret not having space here to discuss these problems.

There are two kinds of models, anthropologist Clifford Geertz has suggested: models of something, made in order to develop one’s understanding of it, and models for something imagined or known only from fragmentary evidence (Geertz 1973, pp.93–94). In both cases they involve a three-way relation between the modelled object, the model and the modeller (Minsky 1968, p.426). This relation is clear in modelling done by hand, though the presence of the modeller, hence the subjectivity of modelling, is often overlooked. In stochastic modelling, the place of the modeller is taken in the first instance by the machine, but in the second by the human statisticians and programmers, though who does what cannot be reconstructed because of the speed and contingency of data with which the modelling is done. The model is likewise dynamic and evolving. The modelled object is whatever the Big Data approximate: for example, the entirety of contemporary online writing in whatever language is of interest.

Modelling by hand, for example of a large and variegated textual edition, runs into all the problems an editor would expect, that is, of the explicitness and consistency of the categories that define the entities of the edition. In digital terms, one encounters more or less the same situation: objects of interest must be represented in a completely explicit and absolutely consistent manner. Fudges that might be accommodated in a hand-made edition thus present a problem for the digital editor, serious to the degree that the chosen objects require interpretation. Literary objects, such as metaphors, allusions, personifications and the like, range from difficult to impossible.

Modelling is thus not strictly mimetic of an object in the real world, nor an imagined one, because both require the object’s translator (traduttore) to render it into binary terms and to choose what is to be included – hence rigorously to compromise (traditore). In return for such sacrifices of truth, the modeller receives enormous combinatorial, manipulatory power over the modelled object or idea, as well as the ability to communicate it rapidly and widely. Thus the essential tension of the digital trade-off, between mimetic fidelity and computational power.

4. Modelling of

Let me give three illustrative examples, in the form of thought experiments, one art-historical, two literary.

The art-historical example requires homework. Using ordinary skills for searching online, assemble images from the story of Icarus’ fall in Ovid’s Metamorphoses painted by Pieter Bruegel the Elder (1558), Joos de Momper (1565), Vlaho Bukovac (1897), Herbert Draper (1898), Henri Matisse (1947), Marc Chagall (1975), Joseph Santore (2013–2015) and Briana Angelakis (2015).

From a strictly computational point of view, the problem is deriving a rigorous set of criteria by which an algorithm could discover all of these paintings without the help of their titles, or if it had those titles, how it could qualify their variations on Ovid’s literary theme. As Marina Warner wrote recently, when the facts are missing we tell stories: ‘The speculative mind generates experience – imagined experience’ (Warner 2017, p.37). The machine has only the data to go on. The data can, of course, include our choices, and if there are enough of us whose choices are available, produce fairly reliable results. But I am excluding that possibility for purposes of argument.

The second example involves identifying the narrator of the last chapter of Barbara Kingsolver’s novel, The Poisonwood Bible, which tells the story of an American family taken to what was then the Belgian Congo by the evangelical father, a fiery Baptist. Each chapter is narrated by a member of the family (excluding the father); the narrator’s name is the title of the chapter – except for the last chapter, which has no title, hence no digitally unambiguous identity. The reader figures out whose voice it is by a complex inference: that the narrator must be the dead child Ruth May, or rather the being to whom she reverted, according to local belief, when she died: the nameless and all-seeing muntu, who encompasses all people born, unborn and dead, as her sister Adah explains at the beginning of a previous chapter. The problem is how to follow that inference algorithmically, not to the name ‘Ruth May’ but to the muntu whom Ruth May once instantiated – or so I as a reader have inferred in the welcome absence of an authorial statement. One might say, as in the previous example, that were enough readers’ pronouncements available, an algorithm could reach the same conclusion by proxy. But consider: would this explicit naming be identical to the reader’s unspoken mental event?

The third example concerns allusion in Seamus Heaney’s poem ‘The Railway Children’. (My advice is to stop reading now, locate the poem online or on your shelves, read it and return here.) The problem is what an algorithm might do with the last six words, ‘through the eye of a needle’. Anyone familiar with the parable of the rich man in the Synoptic Gospels will recognise and follow the allusion to the source, make the connection and experience, shall we say, a metanoia. But again consider the difference between what happens poetically in the mind when an allusion is recognised but not explicitly labelled, and what happens when it is delivered as a computed result.

In all three cases we are left with a return to the drawing board and a number of questions. These spur us to ask first how we know what we know, then perhaps to ponder whether we have the right idea of what the machine is for.

But let me return to the crucial implications of that which digital computation requires: (1) if prepared by hand, that real-world objects be labelled in a completely explicit and absolutely consistent manner, or (2) if statistically determined, that the resultant meaning or identity be accepted as the majority agreement of those who have commented. For (1), experience speaks clearly, that to the degree that interpretation is required, compromise after compromise demonstrates that supposed objectivity is an illusion, however much convenience is gained in communicating and consulting the scholarly work concerned. That convenience is not to be gainsaid, but it comes at a cost. For (2), the gain of providing and enjoying worldwide access is the same, and where the vox populi is available online, one might then have a reliable sampling of current views. But by the very processes of statistical computation, one would not be able to trace computed judgments to their individual sources.

To my mind the nub of the matter is what happens to the Leibnizian negotiation within the felix conubio of scholar and source, whether the scholar is acting as an editor of the source or as a reader. I am fond of pointing to the analogy of experimental science as developed, for example, by historian David Gooding, who in his life-long study of the 19th-century scientist Michael Faraday’s meticulously detailed laboratory notebooks, attempted to recreate what Faraday did (Gooding 1990). His interest was in the ways in which Faraday obtained new knowledge of the world from the behaviour of his experimental apparatus. Gooding (2003, Figure 13.4) sketches the process of modelling he describes in terms of that apparatus, immediately translatable into the computational situation, which he understood well.

My experience in constructing An Analytical Onomasticon to the Metamorphoses of Ovid (McCarty 2022) convinced me that modelling is analogously experimental and that it offers a potentially effective way of gaining new insights into scholarly sources. As an historian of science, Gooding spoke of obtaining new knowledge about the world through construals of what the experimenter observes, as he wrote, the ‘flexible, quasi-linguistic messengers between the perceptual and the conceptual’ (Gooding 1986, p.208). These become provisional scientific knowledge that is then challenged, tested and, if successful, accepted. As a scholar in the humanities, I prefer to speak of insights from such construals.

In the scheme of things, computing in the humanities is very young. Much needs to be done. We need, for example, to marshal the work of many decades on human-computer interaction, rethinking interaction especially in its attention to performance – ‘computers as theatre’, to quote the title of Brenda Laurel’s book (2014). Taking my analogy to experimental work in the laboratory sciences, we need to pay attention to the cognitive psychological aspects of writings by Gooding, Ryan Tweney, Nancy Nersessian and many others. Phenomenology should come into play. If we do all that, we will be far better equipped to design digital critical editions.

Allow me to put to one side my ignorance and naivety as an amateur in these fields (literally, a lover of what they do but no specialist) to insist on one thing: that we never let slip from sight the constraints – the trade-offs – of digital representation and processing. Some would say, and in fact do say, that the genius of the digital is that it renders the digital irrelevant, as it is when we listen to digitally recorded music. For research uses, I think that Aden Evens, in The Logic of the Digital (Evens 2015), is basically right: that the discrete, all-or-none quality of the digital runs all the way from hardware circuitry to user-interface and to the resources the scholar uses. I conclude that to ignore the digitality of the digital is to obscure the foil against which our reasoning struggles and is perhaps transformed when we do the kind of work that I am describing, not digital editions only but all scholarly applications of the machine.

I have just suggested a very big question that I haven’t thought enough about, namely reasoning’s changes from prolonged exposure to the machine. But I will dare to guess that there are four aspects of computing’s influence to consider. One is defined by that foil to reasoning. Another has to do with the effects of googling on the conventions of normal academic discourse.2 The last two aspects, the combinatorial and simulative powers of the machine, take up the remainder of this essay.

5. How it works

Many have said, following Lady Lovelace’s dictum, that the machine can only do what it is told to do (Lovelace 1843, p.722), or in the anxious language of the mid-20th century, the computer is but a ‘fast moron’. IBM made this slur into doctrine in the 1950s to salve public fears of artificial intelligence sparked by the machine’s highly publicised winning at draughts (checkers) and chess; the mantra then spread (McCorduck 1979, pp.159, 173; Armer 1963). But IBM’s ‘fast moron’ is not the machine we have, which in essential respects is the one Herman Goldstine and John von Neumann addressed in the first-ever paper on programming (Goldstine and von Neumann 1947). They pointed out that the difference in design that makes the crucial difference is the provision that allows a running program, conditional on the outcome of previous operations, to deviate from the linear sequence of instructions or to rewrite those instructions on the fly (noted by Lovelace 1843, p.675). One of Goldstine and von Neumann’s ‘flow diagrams’, shown in Figure 6, illustrates the point. They explained that coding ‘is not a static process of translation, but rather the technique of providing a dynamic background to control the automatic evolution of a meaning’ as the machine follows unspecified routes in unspecified ways in order to accomplish specified tasks (Goldstine and von Neumann 1947, p.2, emphasis mine). Thus, Herbert Simon: ‘This statement – that computers can only do what they are programmed to do – is intuitively obvious, indubitably true, and supports none of the implications that are commonly drawn from it’ (Simon 1960; see also Feigenbaum and Feldman 1963, pp.3–4). The idea to which Simon refers is, as Marvin Minsky remarked, ‘precomputational’ (McCorduck 1979, p.71).

Goldstine and von Neumann go on: the high level of complications that result from this design are ‘not hypothetical or exceptional… they are indeed the norm’; the power of the machine ‘is essentially due to them, i.e. to the extensive combinatorial possibilities which they indicate’ (1947, p.2). In essence, as von Neumann suggested four years later, machines ‘of the digital, all-or-none type’ work by combining and recombining the data under given constraints (von Neumann 1951, p.16). In a nutshell, then, the value-added at this stage is combinatorial.
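The design provision described above, by which a running program may deviate from the linear sequence of its instructions depending on the outcome of earlier operations, can be made concrete with a toy interpreter. This is a minimal sketch in Python under loud assumptions: the three-instruction vocabulary (`add`, `dec`, `jump_if_positive`) is hypothetical, it is not Goldstine and von Neumann’s notation, and for brevity the program is kept apart from the data, so the sketch shows conditional branching only and not the rewriting of instructions on the fly.

```python
def run(program, memory, max_steps=1000):
    """Execute a toy program; return the final memory and the route taken."""
    pc = 0          # index of the instruction to execute next
    trace = []      # sequence of instruction indices actually visited
    for _ in range(max_steps):
        op, *args = program[pc]
        trace.append(pc)
        if op == "add":                   # memory[dst] += memory[src]
            dst, src = args
            memory[dst] += memory[src]
            pc += 1
        elif op == "dec":                 # memory[cell] -= 1
            (cell,) = args
            memory[cell] -= 1
            pc += 1
        elif op == "jump_if_positive":    # branch on an earlier result
            cell, target = args
            pc = target if memory[cell] > 0 else pc + 1
        elif op == "halt":
            return memory, trace
        else:
            raise ValueError(f"unknown instruction: {op}")
    raise RuntimeError("step limit reached without halting")


# Four written instructions that add up n + (n - 1) + ... + 1.
program = [
    ("add", "total", "n"),           # 0
    ("dec", "n"),                    # 1
    ("jump_if_positive", "n", 0),    # 2: loop back while n is still positive
    ("halt",),                       # 3
]

memory, trace = run(program, {"n": 4, "total": 0})
print(memory["total"], trace)
```

The text of the program is four lines long, but the route the machine follows through it depends on the data it meets: change the starting value of `n` and the trace changes with it. That, in miniature, is the sense in which the written code supplies a background for an evolving process and is not a fixed script.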

For an analogy take what happens in a research library, which provides a large number of modular resources in a standard format so that a variety of readers with unforeseen purposes may combine and recombine them ad lib. (We have had such a device at least since the Library of Ashurbanipal, in the 7th century BCE, if I am not mistaken.) On a larger scale, in more recent form, we see more or less the same with the web; on a smaller scale with a single codex, particularly obvious when it is designed as a reference work, such as a critical edition built to foster recombinatorial liberties. (The works of Voltaire and Leibniz come to mind as fit corpora for such treatment.) But my point is the familiarity of this way of working, though now by the perhaps unfamiliar means of statistical tools for finding patterns in masses of data. Once again, distant reading is the most obvious example. Surprises from the process begin to emerge, Alan Turing suggested in 1950 (by analogy to the critical mass of a nuclear reaction) at a quantitative threshold of complexity (Turing 1950, p.454). In digital humanities, we find cogent surprises from stylometric analysis as well as from distant reading. See, for example, the work of John Burrows (Burrows 2010) and the pamphlets of the Stanford Literary Lab.3

6. Modelling-for and simulation

The last aspect of reasoning with the machine that I will consider begins with synthetic modelling for. Modelling-for is commonplace in engineering as a way of converging on an optimal design, for example of an airplane wing. In historical or archaeological research, its aim is to create a simulacrum of a phenomenon that no longer exists, in whole or in part, from whatever evidence is at hand and whatever reliable conjectures may be possible. Examples are the Roman Forum and the Theatre of Pompey in Rome, of which little visible remains. These have been reconstructed visually by such modelling.4 Modelling-for can also be used in the manner of a thought experiment to speculate about what might be or might have been.

Modelling-for blurs into simulation when the model is, as it were, turned loose to see what can be learned from it, from interacting with it and changing its parameters. Simulation is used to study phenomena that are difficult or impossible to observe or, as the historian of the U.S. Manhattan Project wrote, ‘too far from the course of ordinary terrestrial experience to be grasped immediately or easily’ (Hawkins 1946, p.76). The physicists of Los Alamos reached beyond their terrestrial experience by simulating the random behaviour of neutrons in a nuclear chain-reaction, using the so-called Monte Carlo technique (Galison 1996). Closer to our own interests (to cite two examples, one recent, the other old), linguists have used simulation to study the migration of dialects (Kretzschmar and Juuso 2014) and naval historians to approximate the course of a battle at sea (Smith 1967, p.20).
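For readers who have not met the Monte Carlo technique, a toy version may help to show what ‘turning a model loose’ involves. The sketch below, in Python, is emphatically not the Los Alamos calculation: the one-dimensional slab, the function names and every parameter value (`thickness`, `absorb_prob`, `mean_free_path`) are assumptions made purely for illustration. It follows many imaginary particles, one random step at a time, and counts how many pass through to the far side.

```python
import random


def neutron_escapes(rng, thickness=5.0, absorb_prob=0.3, mean_free_path=1.0):
    """Follow one toy particle through a 1-D slab; True if it exits the far side."""
    x = 0.0
    direction = 1.0                      # the particle starts by moving into the slab
    while True:
        # Distance to the next collision, drawn from an exponential distribution.
        x += direction * rng.expovariate(1.0 / mean_free_path)
        if x >= thickness:
            return True                  # passed through to the far side
        if x <= 0.0:
            return False                 # scattered back out of the near side
        if rng.random() < absorb_prob:
            return False                 # absorbed inside the slab
        direction = rng.choice((-1.0, 1.0))   # scattered forwards or backwards


def estimate_transmission(trials=10000, seed=0, **params):
    """Estimate the fraction of particles that get through, by brute repetition."""
    rng = random.Random(seed)            # seeded so that a run can be repeated
    passed = sum(neutron_escapes(rng, **params) for _ in range(trials))
    return passed / trials


print(estimate_transmission(thickness=5.0))
print(estimate_transmission(thickness=1.0))
```

Nothing in the code states how many particles will get through; the answer emerges only from running it, and changing a parameter such as `thickness` and running it again is the interaction with the model described above.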

In the physical sciences, including climatology, simulation was just about coeval with the invention of digital computing machinery; indeed, these sciences were a driving force in its earliest development (McCarty 2019). Work in economics and other social sciences followed soon after. Most of us have forgotten that there is a rich and valuable history of simulation in the creative arts from the 1950s through the 1970s, of possible worlds and of works of art co-created in interaction with the viewer (Feran and Fisher 2017). There is, in other words, a long and complex tradition of modelling of the more imaginative, less mimetic kind that offers us lessons in risk-taking and its rewards, from which we have much to learn.

I want to conclude by giving you some idea of the limb out on which I now invite you to climb for the view of new things. The philosopher Paul Humphreys argues that in going out on that limb with computing, beyond what we can do otherwise, scientific epistemology ceases to be human epistemology (Humphreys 2004, p.8). I think that David Gooding is right, and that the humanness of our knowledge is a matter of what we do with what we learn in extenso, from what we could not otherwise reach.

Before I conclude with an example illustrating what I mean by that, with keen awareness of how nervous we are apt to get about venturing out or up beyond ground we take to be solid, allow me to recommend two bracing touchstones: first, American essayist Elaine Scarry’s 1992 article, ‘The made-up and the made-real’; second, part B of Canadian philosopher Ian Hacking’s 1983 book, Representing and Intervening. Experts in these areas will have more recent items to recommend, I trust, but these two (as well as several others that I forbid myself from mentioning) mark important moments of light on my subject.

7. Extending ourselves

Elsewhere I have argued that it is far more useful and true to the practice of simulation – that is, to the use of the machine to reach for knowledge ‘too far from the course of ordinary terrestrial experience’ – to regard the machine not merely as a tool for pushing back ‘the fence of the law’, to adapt Jacob Bronowski’s characterisation of science (Bronowski 1978, p.59), nor merely as a tool for adventurous play, but as a prosthesis for the imagination that ranges along Elaine Scarry’s spectrum from ‘making-up to making-real’. I have suggested that at least from the perspective of the disciplines of making, simulation is the essential genius of the machine, and so what we must understand about it and learn to employ. I have suggested that simulation is a tool for restructuring our experience of the world, that as anthropologist Jadran Mimica says of the combinatorial ethnomathematics of the Iqwaye people, it is mythopoetic (Mimica 1988, p.5). Other recent work in ethnomathematics and in the history of combinatorics suggests that cosmological world-making by combining and recombining units of experience, such as the digits we are born with, is as close to a universal language ‘after Babel’ as we are likely to get (Ascher 2002; Wilson and Watkins 2013, part II). Leibniz’s calculemus! and our own combinatorics belong in this world-wide tradition.

Fascinating (isn’t it?) that the device from which we have so often expected closure turns out to be so paradoxically a tool that does the opposite.

Historian of biology Evelyn Fox Keller has cautioned us, noting that simulation changes, sometimes radically, from discipline to discipline (Keller 2003). I have argued for its continuities across disciplines so as to project it where it has not yet made much headway. What then might it look like in the disciplines of the humanities?

For a suggestive answer, the best I know, I turn to John Wall’s Virtual Paul’s Cross Project5 and Virtual St Paul’s Cathedral.6 These are, respectively, visual and auditory simulations of John Donne’s Gunpowder Day sermon at Paul’s Cross, 5 November 1622, and services in the cathedral, with supporting visualisations. As we know, both the medieval St Paul’s Cathedral and the Paul’s Cross preaching station within its grounds were destroyed, together with the surrounding buildings, in the Great Fire of 1666. So, in simulating the acoustics, there is much room for conjecture.

Wall’s aim is to explore early-modern preaching through performance by simulating all of what Donne’s congregation could be reasonably expected to have heard. Wall and a number of other early modernists argue that the sermons of the time were their performances, the texts we have merely traces of them. Consider the argument by analogy: would we mistake the score of the Goldberg Variations for Bach’s music, or the script of Tennessee Williams’s A Streetcar Named Desire for the play? Of course not; we would know immediately that they are instructions for interpretative performances of the music and the drama respectively, just as we ought to think of programming code as instructions for a different sort of interpretative performance. But we are so accustomed to silent reading that we are likely to regard the text of, say, Donne’s ‘Goodfriday 1613. Riding westward’ as the primary object, and so, by extension, to overlook that for the sermon, in St Paul’s words, ‘faith comes by hearing’ (Romans 10:17).

Social cohesion and political unity come also. Paul’s Cross sermons were composed ex tempore from notes by a preacher who ‘faced a congregation gathered in the open air and surrounded by a host of distractions, including the birds, the dogs, the horses, the bells, and each other’ (Wall 2014). Dramatic engagement was essential to their success. What do we need, as scholars, in order to get closer to the sermon as it was?

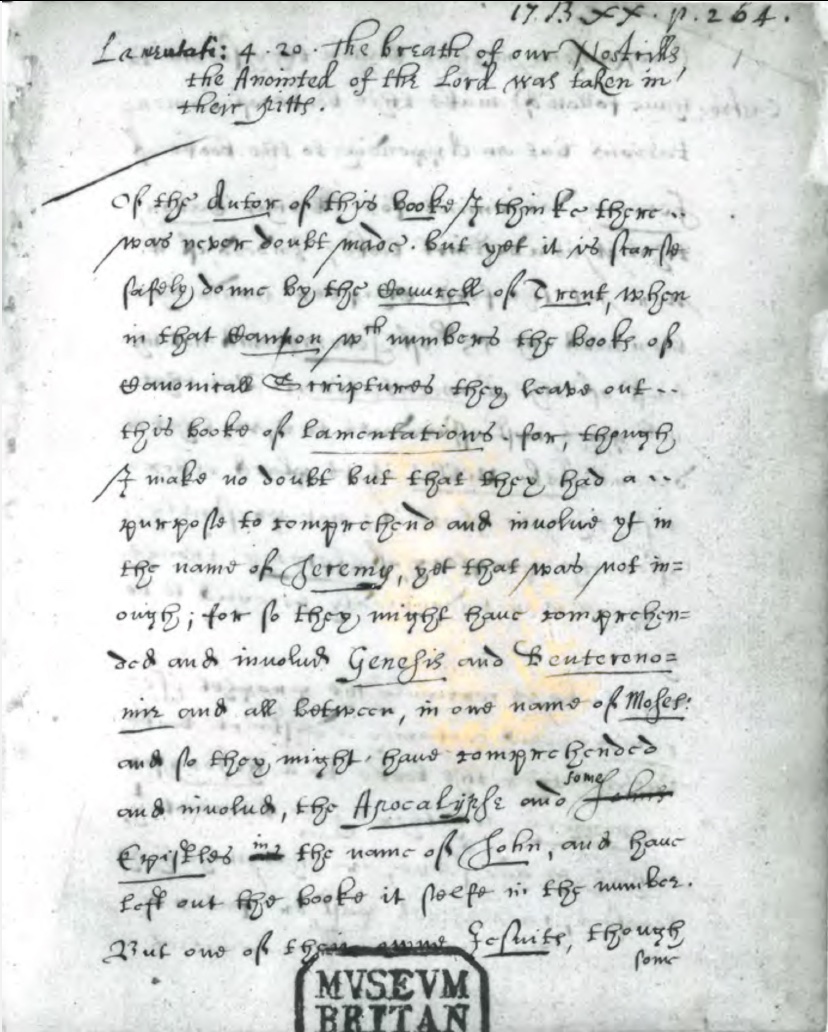

Near-contemporary evidence of Donne’s sermon was confined to the first printed edition of 1649 until, in 1995, Jeanne Shami identified British Library manuscript Royal 17 B XX as ‘a scribal presentation copy […] corrected in Donne’s own hand’ (2007, p.77; see Figure 7). This is very likely the manuscript that was produced at the request of James I from Donne’s notes, hours, days or weeks after the event. Twenty-seven years passed before the text was printed. The Great Fire destroyed almost everything – but not absolutely everything – relevant to the reconstruction just seventeen years after that.

So what is going on? The project, Wall has written, is ‘about what we are doing when we believe we have discovered, from our experience with a digital environment, things about past events that are not documented by traditional sources’ (Wall 2016, p.283). Indeed, what are we doing? This is the brink of the new – or is it so very new? One may wish to retreat to safer ground, but I wonder just how safe that ground is when one looks closely, how much we build, inferential step by inferential step. And is safety our goal? Universally true ‘positive knowledge’ of the sort that latter-day positivists seek is ephemeral. The Kuhnian model of incommensurable paradigms goes to the opposite pole. I would argue for Peter Galison’s ‘trading zones’ between partially coordinated, thinly described scientific practices (Galison 2010) and point again to Hacking. We must, of course, be scrupulously careful and cautious. Imagination is a wild beast.

My hope for such projects as Wall’s is that someday soon they can become dynamic simulations in which you and I can twiddle the knobs and see what happens if this or that conjecture is altered. Serio ludere, seriously to play – and play imaginatively – is what our machine is all about. Only a few can do that now, only in their labs and only quite slowly. And I very much hope that they, as they work, are, like Faraday, keeping laboratory notebooks so that we can begin to follow in David Gooding’s footsteps. To do that we need a much wider, more institutionally committed gathering of the disciplinary tribes. In my book that’s what digital humanities is for.

Notes

1. Oxford English Dictionary, s.v. ‘reason, n.¹, sense II.5.a’, https://doi.org/10.1093/OED/5117557630. I do not consider the question of artificial intelligence explicitly here; for that see McCarty (forthcoming, 2024).

2. Richard Rorty, who writes well about this, has suggested a Gadamerian shift from metaphors of depth to metaphors of breadth, from probing one thing deeply to assembling and comparing many things (Rorty 2004). But I shall say no more about that here.

3. https://litlab.stanford.edu/pamphlets/.

4. E.g. http://dlib.etc.ucla.edu/projects/Forum/.

5. https://vpcross.chass.ncsu.edu.

6. https://vpcathedral.chass.ncsu.edu.

References

Anderson M. L. 2014. After Phrenology: Neural Reuse and the Interactive Brain. Cambridge MA: MIT Press.

Armer P. 1963. ‘Attitudes toward intelligent machines’. In: Feigenbaum E. A., Feldman J. (eds) Computers and Thought. New York: McGraw-Hill, 389–405.

Ascher M. 2002. Mathematics Elsewhere: An Exploration of Ideas Across Cultures. Princeton NJ: Princeton University Press.

Auerbach E. 1984. ‘Figura’. Mannheim R. (trans.). In: Scenes from the Drama of European Literature. Theory and History of Literature 9. Minneapolis MN: University of Minnesota Press, 11–76.

Baird D. 2004. Thing Knowledge: A Philosophy of Scientific Instruments. Berkeley CA: University of California Press.

Bender E. M. et al. 2021. ‘On the dangers of stochastic parrots: can language models be too big?’ In: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 610–23. https://doi.org/10.1145/3442188.3445922.

Bronowski J. 1978. ‘Knowledge as algorithm and metaphor’. In: The Origins of Knowledge and Imagination. Silliman Memorial Lecture, Yale University. New Haven CT: Yale University Press, 41–63.

Burrows J. 2010. ‘Never say always again: reflections on the numbers game’. In: McCarty W. (ed.) Text and Genre in Reconstruction: Effects of Digitalization on Ideas, Behaviour, Products and Institutions. Cambridge: Open Book Publishers, 13–16.

Cover R. 2015. Digital Identities: Creating and Communicating the Online Self. Cambridge MA: Academic Press.

Dumit J. 2016. ‘Plastic diagrams: circuits in the brain and how they got there’. In: Bates D. and Bassiri N. (eds) Plasticity and Pathology: On the Formation of the Neural Subject. Berkeley CA: Townsend Center for the Humanities, University of California; New York: Fordham University Press.

Essinger J. 2004. Jacquard’s Web: How a Hand Loom Led to the Birth of the Information Age. Oxford: Oxford University Press.

Evens A. 2015. The Logic of the Digital. London: Bloomsbury.

Feigenbaum E. A. and Feldman J. (eds) 1963. Computers and Thought. New York: McGraw-Hill.

Feran B. and Fisher E. (eds) 2017. The Experimental Generation. Special issue of Interdisciplinary Science Reviews 42:1–2.

Firth J. R. 1957. ‘A synopsis of linguistic theory, 1930–1955’. In: Studies in Linguistic Analysis. Oxford: Basil Blackwell, 1–32.

Galison P. 1996. ‘Computer simulations and the trading zone’. In: Galison P. and Stump D. J. (eds) The Disunity of Science: Boundaries, Contexts, and Power. Stanford CA: Stanford University Press, 118–57.

Galison P. 2010. ‘Trading with the enemy’. In: Gorman M. E. (ed.) Trading Zones and Interactional Expertise: Creating New Kinds of Collaboration. Cambridge MA: MIT Press, 25–52.

Geertz C. 1973. The Interpretation of Cultures. New York: Basic Books.

Goldstine H. H. and von Neumann J. 1947. Planning and Coding of Problems for an Electronic Computing Instrument. Report on the Mathematical and Logical Aspects of an Electronic Computing Instrument, part II, vol.1–3. Princeton NJ: Institute for Advanced Study. https://www.ias.edu/sites/default/files/library/pdfs/ecp/planningcodingof0103inst.pdf.

Gooding D. 1986. ‘How do scientists reach agreement about novel observations?’ In: Studies in History and Philosophy of Science 17:2, 205–30.

Gooding D. 1990. Experiment and the Making of Meaning. Dordrecht: Kluwer.

Gooding D. 2003. ‘Varying the cognitive span: experimentation, visualization, and computation’. In: Radder H. (ed.) The Philosophy of Scientific Experimentation. Pittsburgh PA: University of Pittsburgh Press, 255–84.

Hacking I. 1983. Representing and Intervening: Introductory Topics in the Philosophy of Natural Science. Cambridge: Cambridge University Press.

Hawkins D. 1946. Inception until August 1945. Manhattan District History. Project Y. The Los Alamos Project 1. Los Alamos NM: Los Alamos Scientific Laboratory. http://www.osti.gov/manhattan-project-history/Resources/library.htm.

Humphreys P. 2004. Extending Ourselves: Computational Science, Empiricism, and Scientific Method. Oxford: Oxford University Press.

Johnston M. D. 1987. The Spiritual Logic of Ramon Llull. Oxford: Clarendon Press.

Kageki N. 2012. ‘An uncanny mind’. In: IEEE Robotics and Automation Magazine. June: 112, 106, 108. https://www.nxtbook.com/nxtbooks/ieee/roboticsautomation_june2012/index.php?startid=2#/p/112.

Keller E. F. 2003. ‘Models, simulation, and “computer experiments” ’. In: Radder H. (ed.) The Philosophy of Scientific Experimentation. Pittsburgh PA: University of Pittsburgh Press, 198–215.

Kretzschmar W. A. Jr. and Juuso I. 2014. ‘Simulation of the complex system of speech interaction: digital visualizations’. In: Literary and Linguistic Computing 29:3, 432–42.

Laurel B. 2014. Computers as Theatre. 2nd edn. Upper Saddle River NJ: Addison-Wesley.

Leibniz G. W. 1958. Grundriss eines Bedenkens von Aufrichtung einer Societät. In: Gerhard Krüger (ed.) Leibniz: Die Hauptwerke. Stuttgart: Alfred Kröner Verlag, 3–17.

Lovelace A. 1843. Translator’s notes to Menabrea L. F. ‘Sketch of the Analytical Engine invented by Charles Babbage Esq.’. In: Taylor R. (ed.) Scientific Memoirs, Selected from The Transactions of Foreign Academies of Science and Learned Societies, and from Foreign Journals 3. London: Richard and John E. Taylor, 666–731.

McCarty W. 2019. ‘Modelling the actual, simulating the possible’. In: Flanders J. and Jannidis F. (eds) The Shape of Data in Digital Humanities. London: Ashgate, 264–84.

McCarty W. 2022. ‘The Analytical Onomasticon Project: an auto-ethnographic vignette’. In: Textual Cultures 15:1, 44–52. https://muse.jhu.edu/article/867235.

McCarty W. 2024 (forthcoming). ‘Steps towards a therapeutic artificial intelligence’. In: Science in the Forest, Science in the Past III. Interdisciplinary Science Reviews 49:1.

McCorduck P. 1979. Machines Who Think: A Personal Inquiry into the History and Prospects of Artificial Intelligence. San Francisco CA: W. H. Freeman and Company.

McGann J. 2001. Radiant Textuality: Literature after the World Wide Web. New York: Palgrave.

Mimica J. 1988. Intimations of Infinity: The Cultural Meanings of the Iqwaye Counting and Number System. Oxford: Berg.

Minsky M. 1968. ‘Matter, mind, and models’. In: Minsky M. (ed.) Semantic Information Processing. Cambridge MA: MIT Press, 425–32. http://groups.csail.mit.edu/medg/people/doyle/gallery/minsky/mmm.html.

Moretti F. 2000. ‘Conjectures on world literature’. In: New Left Review 1, 54–68.

Morgan M. S. 2012. The World in the Model: How Economists Work and Think. Cambridge: Cambridge University Press.

von Neumann J. 1951. ‘The general and logical theory of automata’. In: Jeffress L. A. (ed.) Cerebral Mechanisms of Behavior. The Hixon Symposium. New York: John Wiley & Sons, 1–41.

O’Sullivan S. 2012. ‘The sacred and the obscure: Greek in the Carolingian reception of Martianus Capella’. In: The Journal of Medieval Latin 22, 67–94.

Pratt V. 1987. Thinking Machines: The Evolution of Artificial Intelligence. Oxford: Basil Blackwell.

Richards I. A. 1924. Principles of Literary Criticism. London: Routledge & Kegan Paul.

Riskin J. 2016. The Restless Clock: A History of the Centuries-Long Argument over What Makes Living Things Tick. Chicago IL: University of Chicago Press.

Rorty R. 2004. ‘Being that can be understood is language’. In: Krajewski B. (ed.) Gadamer’s Repercussions: Reconsidering Philosophical Hermeneutics. Berkeley CA: University of California Press, 21–29.

Rouse M. A. and Rouse R. H. 1991. ‘The development of research tools in the thirteenth century’. In: Authentic Witnesses: Approaches to Medieval Texts and Manuscripts. Notre Dame IN: Notre Dame University Press, 221–55.

Rouse R. H. and Rouse M. A. 1974. ‘The verbal concordance to the Scriptures’. In: Archivum Fratrum Praedicatorum 44, 5–30.

Scarry E. 1992. ‘The made-up and the made-real’. In: Yale Journal of Criticism 5:2, 239–49.

Schönberg A. 1963. Schönberg’s ‘Moses and Aaron’. Hamburger P. (trans.) and Wörner K. H. (ed.). London: Faber and Faber.

Scofield C. I. (ed.) 1917. Old Scofield Study Bible. Oxford: Oxford University Press.

Shami J. 2007. ‘New manuscript texts of sermons by John Donne’. In: English Manuscript Studies 1100–1700 15, 77–119.

Simon H. A. 1960. The New Science of Management Decision. New York: Harper & Row.

Smith P. H. Jr. 1967. ‘The computer and the humanist’. In: Bowles E. A. (ed.) Computers in Humanistic Research: Readings and Perspectives. Englewood Cliffs NJ: Prentice-Hall, 16–28.

Turing A. 1950. ‘Computing machinery and intelligence’. In: Mind 59:236, 433–60.

Vermeule E. 1996. ‘Archaeology and philosophy: the dirt and the word’. Presidential Address 1995. In: Transactions of the American Philological Association 126, 1–10.

Wall J. N. 2014. ‘Transforming the object of our study: the early modern sermon and the Virtual Paul’s Cross Project’. In: Journal of Digital Humanities 3:1. http://journalofdigitalhumanities.org/3-1/transforming-the-object-of-our-study-by-john-n-wall/.

Wall J. N. 2016. ‘Gazing into imaginary spaces: digital modeling and the representation of reality’. In: New Technologies in Medieval and Renaissance Studies 6, 283–317.

Warner M. 2017. ‘Diary’. In: London Review of Books 39:22 (16 November), 37–39.

Wilson R. and Watkins J. J. (eds) 2013. Combinatorics Ancient and Modern. Oxford: Oxford University Press.

Zuboff S. 2019. The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. New York: Public Affairs.